August 18, 2018

It has been some time since my last post, about three quarters of a year. Since then, much has happened, and I will try to fill in the gaps, so to speak. When I wrote the previous post, I had received my Turtlebot 2 and sensors and was in the process of setting everything up. I finished that fairly quickly and moved on to the first major goal: autonomous navigation.

Autonomous navigation

Luckily, the ROS (Robot Operating System) navigation stack provides a great solution to this extremely common problem in robotics. Given a known map, the navigation stack lets the robot navigate fully autonomously and adapts dynamically to newly discovered obstacles. Awesome.

To do its thing, however, the navigation stack needs, as I said, a map. A map is a simple representation of an area that marks obstacles and navigable terrain. It is often encoded as an image, where black usually signals obstacles and other colors indicate unobstructed areas. Many algorithms can produce such a map from sensor readings, such as a laser scanner (like my RP-LiDAR A2) and odometry (information on the rotation of the robot's wheels).
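To make the color encoding a bit more concrete, here is a minimal Python sketch of how a grayscale map image can be turned into an occupancy grid. It assumes the convention ROS map_server uses for trinary maps (darker pixels mean a higher chance of an obstacle; 100 = occupied, 0 = free, -1 = unknown) and the thresholds 0.65 and 0.196 that map_saver commonly writes into a map's YAML file. The function names are made up for illustration; this is not map_server's actual code.

```python
# Convert 8-bit grayscale pixels (0 = black, 255 = white) into occupancy values,
# following the trinary convention of ROS map_server: 100 = occupied, 0 = free, -1 = unknown.
# The thresholds are assumptions, taken from values map_saver typically writes.
OCCUPIED_THRESH = 0.65
FREE_THRESH = 0.196


def pixel_to_occupancy(pixel: int) -> int:
    """Map one grayscale pixel to an occupancy value."""
    # Darker pixels mean a higher probability that the cell is occupied.
    p = (255 - pixel) / 255.0
    if p > OCCUPIED_THRESH:
        return 100  # obstacle
    if p < FREE_THRESH:
        return 0  # navigable
    return -1  # unknown / not yet explored


def image_to_grid(image):
    """Convert a 2D list of pixel rows into a 2D list of occupancy values."""
    return [[pixel_to_occupancy(px) for px in row] for row in image]


if __name__ == "__main__":
    # A tiny 3x4 "map": white free space, a black wall, grey unexplored cells.
    image = [
        [254, 254, 0, 205],
        [254, 254, 0, 205],
        [254, 254, 254, 205],
    ]
    for row in image_to_grid(image):
        print(row)
```

Running it prints `[0, 0, 100, -1]` for the first two rows and `[0, 0, 0, -1]` for the last: the white cells are free, the black column is a wall and the grey column is still unknown.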

The standard algorithm employed by ROS is called gmapping, which uses both laser scan data and odometry information to create the map. It performs quite well and is stable, which is why I have been using it, although I am considering switching to the newer Google Cartographer. Cartographer promises more precise maps, the combination of 2D and 3D mapping and many other benefits; however, the installation has not gone as smoothly as expected, which is why I have temporarily put the switch on ice.

Once active, the mapping algorithm records and processes sensor data as you steer the robot manually. There are also exploration algorithms with which the robot creates the map by itself: it uses existing data to predict what unknown areas will look like, navigates based on that prediction and corrects its assumptions with new data along the way.
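One widely used exploration strategy, frontier-based exploration, is easy to sketch: the robot looks for "frontier" cells, i.e. free cells that border unknown space, and drives to the nearest one, which reveals more of the map. The Python below is a simplified illustration working on occupancy values (0 = free, -1 = unknown, 100 = occupied); it is not the algorithm of any specific ROS package, and the helper names are hypothetical. A real implementation would also group frontier cells into regions and measure distance along an actual path rather than in a straight line.

```python
import math

FREE, UNKNOWN, OCCUPIED = 0, -1, 100


def find_frontiers(grid):
    """Return (row, col) of every free cell that borders at least one unknown cell."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            # Check the 4-connected neighbours for unexplored space.
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


def nearest_frontier(grid, robot):
    """Pick the frontier cell closest (straight-line) to the robot, or None if fully explored."""
    frontiers = find_frontiers(grid)
    if not frontiers:
        return None  # nothing left to explore
    return min(frontiers, key=lambda cell: math.dist(cell, robot))


if __name__ == "__main__":
    grid = [
        [0, 0, 0, -1],
        [0, 100, 0, -1],
        [0, 0, 0, 0],
    ]
    print(find_frontiers(grid))          # [(0, 2), (1, 2), (2, 3)]
    print(nearest_frontier(grid, (2, 0)))  # (1, 2)
```

The exploration loop would then be: send the chosen frontier as a navigation goal, let the mapping algorithm update the map as the robot drives, and repeat until `nearest_frontier` returns `None`.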

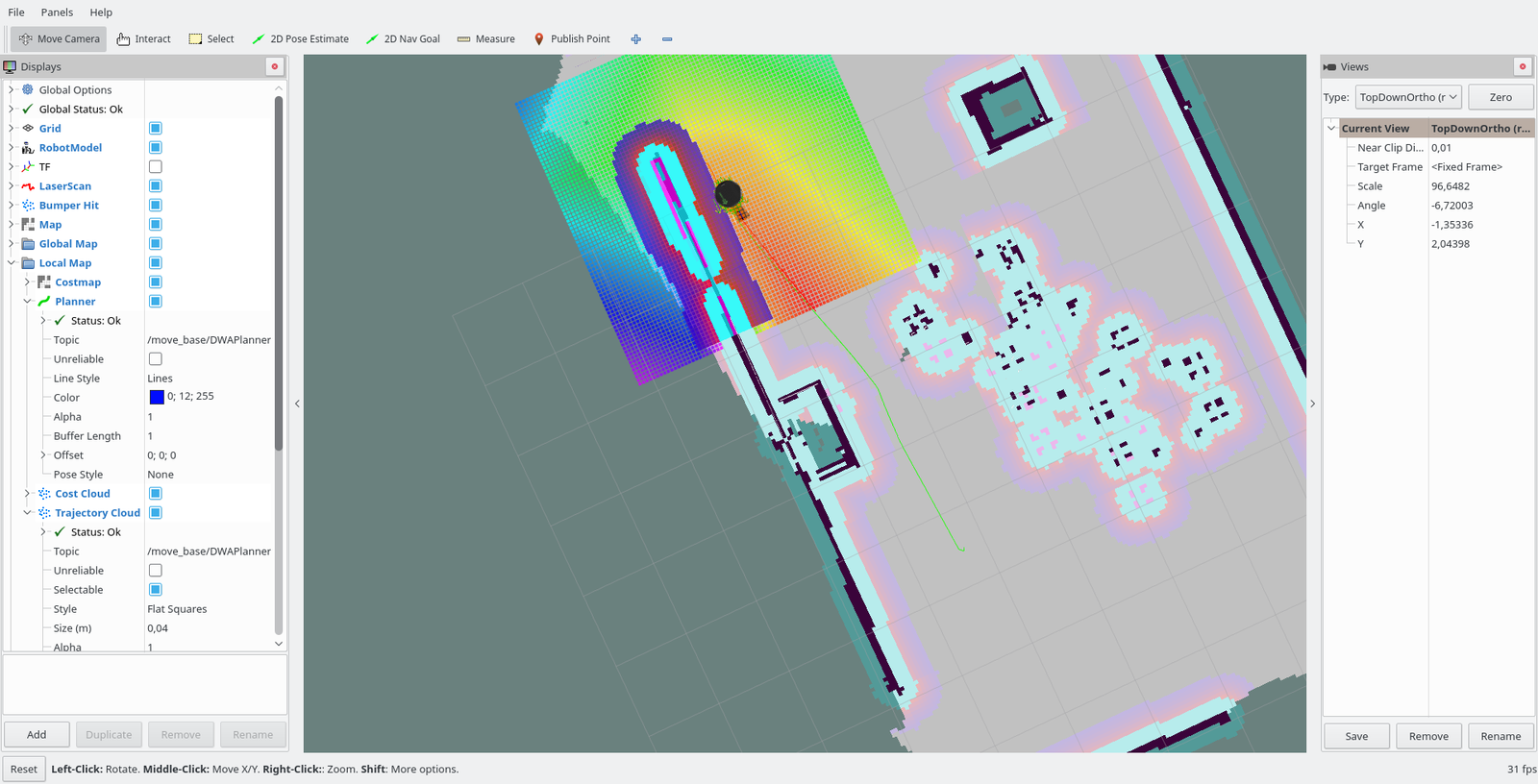

Once the map has been completed and saved, the navigation stack comes into play. With a few simple commands, it loads a previously created map and waits until it is given a navigation goal via a topic, sent either from rviz or from any other node. When it receives a goal, the navigation stack plans a path to it using the map and its own pathfinding algorithms. The stack then issues velocity commands to the robot's mobile base, driving the robot along the planned course. The pathfinding algorithm tries to avoid narrow passages and always keeps some safety margin. Should the robot encounter obstacles that are not recorded on the map, it immediately plans a new path around them without wasting too much time.
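The safety margin and the replanning behaviour can be illustrated with a toy planner. The sketch below is my own simplification, not the navigation stack's code: it "inflates" obstacles so that cells close to them become expensive (similar in spirit to the inflation layer of a ROS costmap), then runs Dijkstra's algorithm over the grid. When a new obstacle shows up, you add it to the grid, rebuild the costs and plan again. The radius and penalty values, as well as all function names, are arbitrary assumptions.

```python
import heapq

OBSTACLE = 100


def build_costmap(grid, radius=2, penalty=10):
    """Give every free cell a traversal cost; cells near obstacles cost more.

    Obstacles become None (impassable). A free cell whose Chebyshev distance d
    to the nearest obstacle is <= radius costs 1 + penalty * (radius - d + 1).
    """
    rows, cols = len(grid), len(grid[0])
    obstacles = [(r, c) for r in range(rows) for c in range(cols) if grid[r][c] == OBSTACLE]
    cost = [[1] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == OBSTACLE:
                cost[r][c] = None
            elif obstacles:
                d = min(max(abs(r - orow), abs(c - ocol)) for orow, ocol in obstacles)
                if d <= radius:
                    cost[r][c] = 1 + penalty * (radius - d + 1)
    return cost


def plan_path(cost, start, goal):
    """Dijkstra over 4-connected cells; returns the list of cells or None if unreachable."""
    rows, cols = len(cost), len(cost[0])
    dist = {start: 0}
    parent = {}
    queue = [(0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if d > dist[cell]:
            continue  # stale queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and cost[nr][nc] is not None:
                nd = d + cost[nr][nc]
                if nd < dist.get(nxt, float("inf")):
                    dist[nxt] = nd
                    parent[nxt] = cell
                    heapq.heappush(queue, (nd, nxt))
    return None


if __name__ == "__main__":
    grid = [[0] * 5 for _ in range(5)]
    grid[2][2] = OBSTACLE  # a single obstacle in the middle of the room
    print(plan_path(build_costmap(grid, radius=1), (2, 0), (2, 4)))
    # A newly discovered wall blocks the whole column: rebuild costs and replan.
    for row in grid:
        row[2] = OBSTACLE
    print(plan_path(build_costmap(grid, radius=1), (2, 0), (2, 4)))  # no route left: None
```

In the first plan, the path swings out along the edge of the room instead of squeezing past the obstacle, because the cells right next to it are expensive. That is the same trade-off the real planner makes between a short path and a comfortable margin.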

In my case, I initially had trouble getting gmapping and the navigation stack to accept my LiDAR. The problem had a relatively simple solution, and the community quickly helped me solve it. After that, I had little difficulty setting up gmapping and the navigation stack, though gmapping has proven not to be completely reliable, occasionally creating extremely inaccurate and distorted maps.

The Turtlebot navigating using a known map

More presentations

Taking a break from the technical side of the project, I also had the opportunity to present it to the Minister President of Hessen, the state I live in, as well as to prominent board members of companies such as Fraport, Linde Group, Merck and many more. Presentations like this really help shape a project, as they force you to condense it into a short and understandable form. Setting aside all the crazy ideas I had about what the project could be, I had to focus on what it was, and on what it really needed to become.

Progress and next steps

There is a lot going on at the moment, especially with the Seek Thermal thermal imaging camera, which I am working on to give the robot fire detection and localisation capabilities. Instead of dragging this post out for another few paragraphs explaining methods and algorithms that I may change tomorrow, I will simply leave it at this: I am actively working on it and will report back once I have a (semi-)working solution.